Shi (Billy) Chen

I'm a final-year undergraduate at Fudan University studying Computer Science and Technology, and an exchange student at UC Berkeley. I'm the Co-founder & CTO of Intuition Core Inc., an AI & Robotics startup developing infrastructure solutions to accelerate real-world robot deployment.

My research interests span computer vision, 3D generation, robotics, and embodied AI. I've been fortunate to work with amazing researchers at UC Berkeley, University of Chicago, University of Cambridge, and Johns Hopkins University. I'm honored to be funded by the National Science Foundation of China under its Youth Fund (one of the highest honors for undergraduates in China; ~120 students nationwide annually).

My mission: I'm driven by the vision of bringing intelligent robots from research labs into the real world, creating transformative solutions that meaningfully improve human lives and unlock new possibilities for society.

News

Entrepreneurial Experience

Intuition Core Inc.

Co-founder & CTO, May 2025 - Present

Berkeley & San Francisco, CA

Co-founded an AI & Robotics startup developing infrastructure solutions to accelerate real-world robot deployment. Designed a novel Masked Autoencoder (MAE)-based world model pretraining architecture that improves downstream policy training efficiency by 15%+. Built ultra-low-latency (<100 ms) teleoperation systems over long distances (Hawaii-SF, Shanghai-SF). Secured $950K in pre-seed funding from Founders Fund and Fen Venture at a $24M post-money valuation cap.

Demo video of our Vision-Language-Action (VLA) model, trained with our customized data collection pipeline and optimized with our world model pretraining method:

Research

I'm interested in computer vision, 3D generation, robotics, and embodied AI. My research focuses on developing methods that enable machines to perceive, understand, and interact with the physical world. Representative works are highlighted.

Semantic Segmentation and Completion for LiDAR Signals

Shi Chen, Weifeng Ge

Advisor: Prof. Weifeng Ge, Fudan University

In preparation for IEEE TPAMI, Sept. 2024 - Present

project page (coming soon)

Proposed a 3D latent diffusion model with multi-stage data augmentation for sparse LiDAR datasets. Designed a multinomial discrete diffusion model achieving 39 mIoU, surpassing the previous SOTA (37.9 mIoU). Funded by the National Science Foundation of China Youth Fund. Currently deploying on the Unitree G1 humanoid and Go1 robot dog for outdoor navigation.

Mesh & Texture Optimization for 3D Generation

Shi Chen, Rana Hanocka

Advisor: Prof. Rana Hanocka, University of Chicago

Individual Summer Research, June - Aug. 2025

project page (coming soon)

Extended the Continuous Remeshing pipeline to generate high-quality meshes from single-frame images. Introduced vertex color optimization with novel loss terms. Demonstrated improved mesh quality over the baseline Marching Cubes algorithm, with >70% preference in a crowdsourced user study.

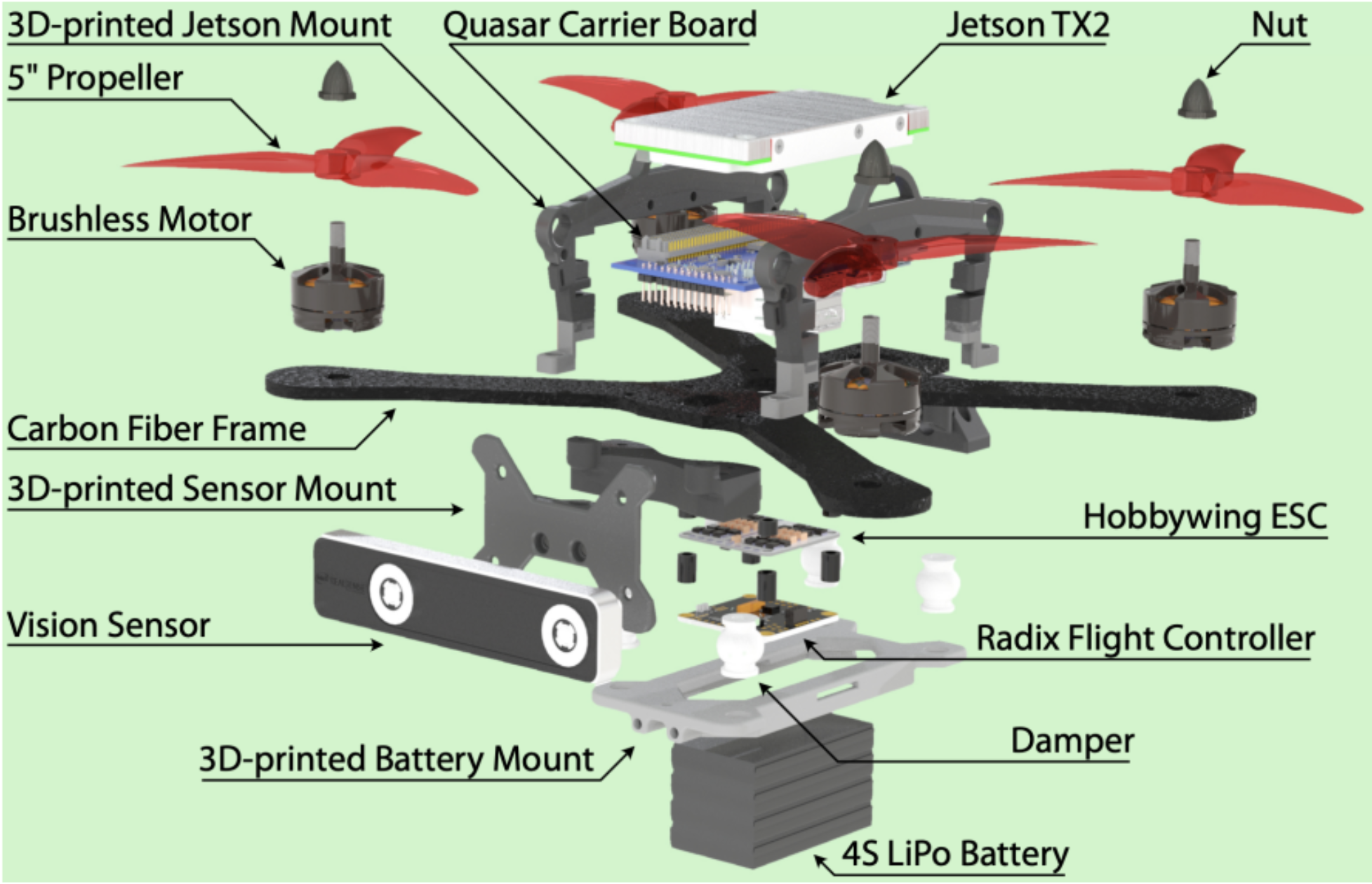

Flying in the Wild, No GPS

Shi Chen, Francesco Crivelli, Peiqi Liu, Siddharth Nath

Advisor: Prof. Shankar Sastry, UC Berkeley

Research Project, Apr. - June 2025

paper

Built a custom quadrotor under the Agilicious framework with specialized hardware. Enabled real-time state estimation using the SVO Pro algorithm for GPS-free navigation. Fine-tuned Qwen-2.5 3B VLM for onboard deployment on Jetson Orin Nano. Verified the feasibility of onboard VLM deployment for real-time quadrotor navigation, reducing hardware costs by several hundred dollars.

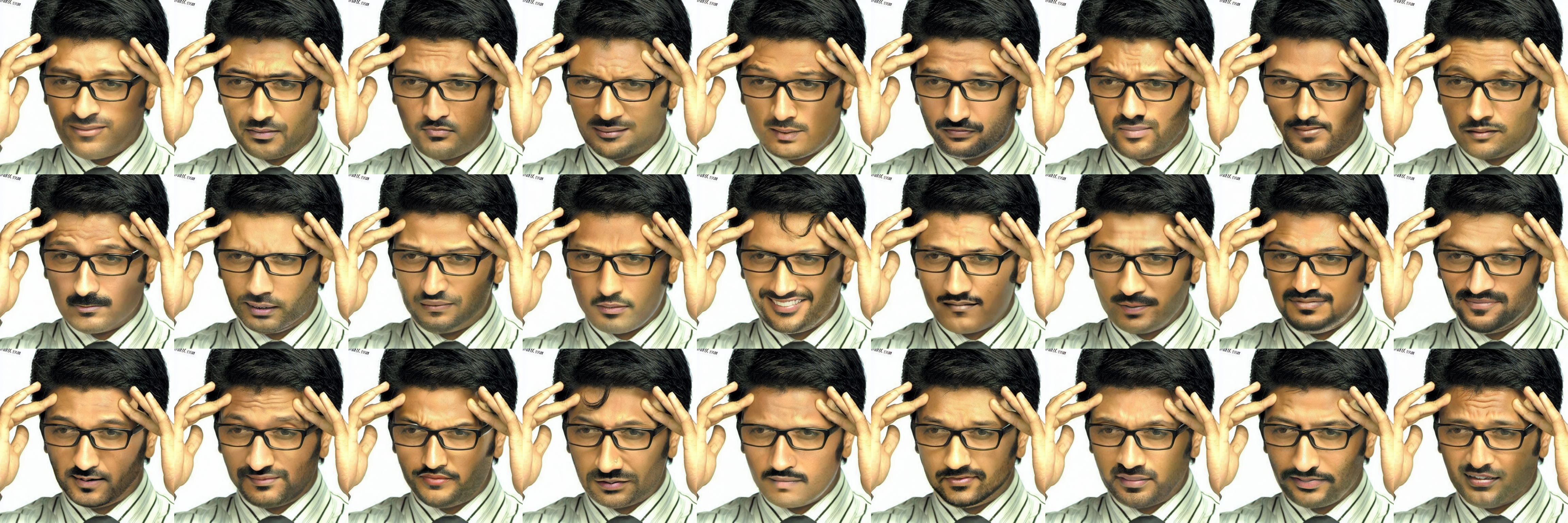

Hi-Fi: High-Quality Synthetic Hand-Over-Face Gestures Dataset with Multimodal Diffusion

Shi Chen, Marwa Mahmoud

Advisor: Prof. Marwa Mahmoud, University of Cambridge

IEEE International Conference on Automatic Face and Gesture Recognition (FG2025), July - Aug. 2024

paper

Proposed a multimodal diffusion pipeline integrating ControlNet into Stable Diffusion, increasing MediaPipe confidence scores from 0.248 to 0.556 (2.24x improvement). Created a synthetic dataset of 170,000+ images across 809 gesture types with an 83.5% quality rating. Selected for the Cambridge Summer Research Programme, graduating with First with Distinction.

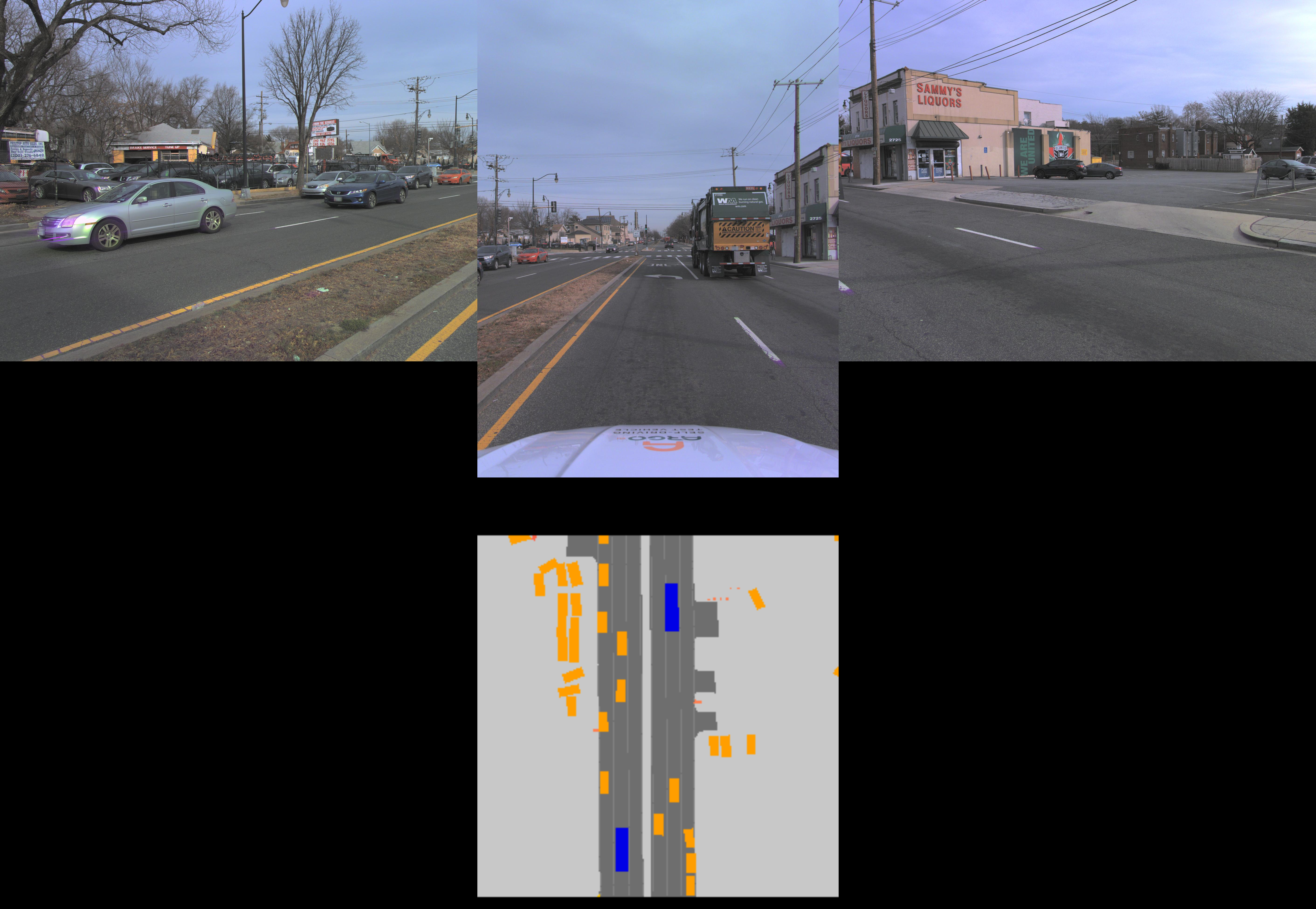

Reconstructing Streets and Augmenting Autonomous Driving

Shi Chen, Xingrui Wang

Collaborator: Dr. Xingrui Wang, Johns Hopkins University

Research Project, Jan. - July 2024

Developed a diffusion model to generate 3D bounding boxes and BEV perception data on the nuScenes dataset. Combined the synthetic input with MagicDrive to create temporally consistent RGB videos. Achieved a 2-4% accuracy improvement on downstream tasks, including BEV perception and 3D bounding box detection on BEVFusion. Used NeRF-based Nerfacto for controllable 3D scene rendering.

Education

University of California, Berkeley

Exchange Student, Jan. - Aug. 2025

GPA: 3.91/4.0

Courses: Programming Languages and Compilers, Robotic Manipulation and Interaction, Advanced Large Language Model Agents

Fudan University

B.Eng. Computer Science and Technology, Sept. 2022 - July 2026 (expected)

GPA: 93/100 (Major GPA: 3.80/4.0); Ranking: 11/91

Supervised by Prof. Weifeng Ge

Honors: National Science Foundation of China Youth Fund (2024), Fudan University Scholarship for Academic Achievement: First with Distinction (2024-2025), Second Prize in China Undergraduate Mathematical Contest in Modeling (2023), Third Prize in Chinese Undergraduate Mathematics Competitions (2023)

Other Selected Projects

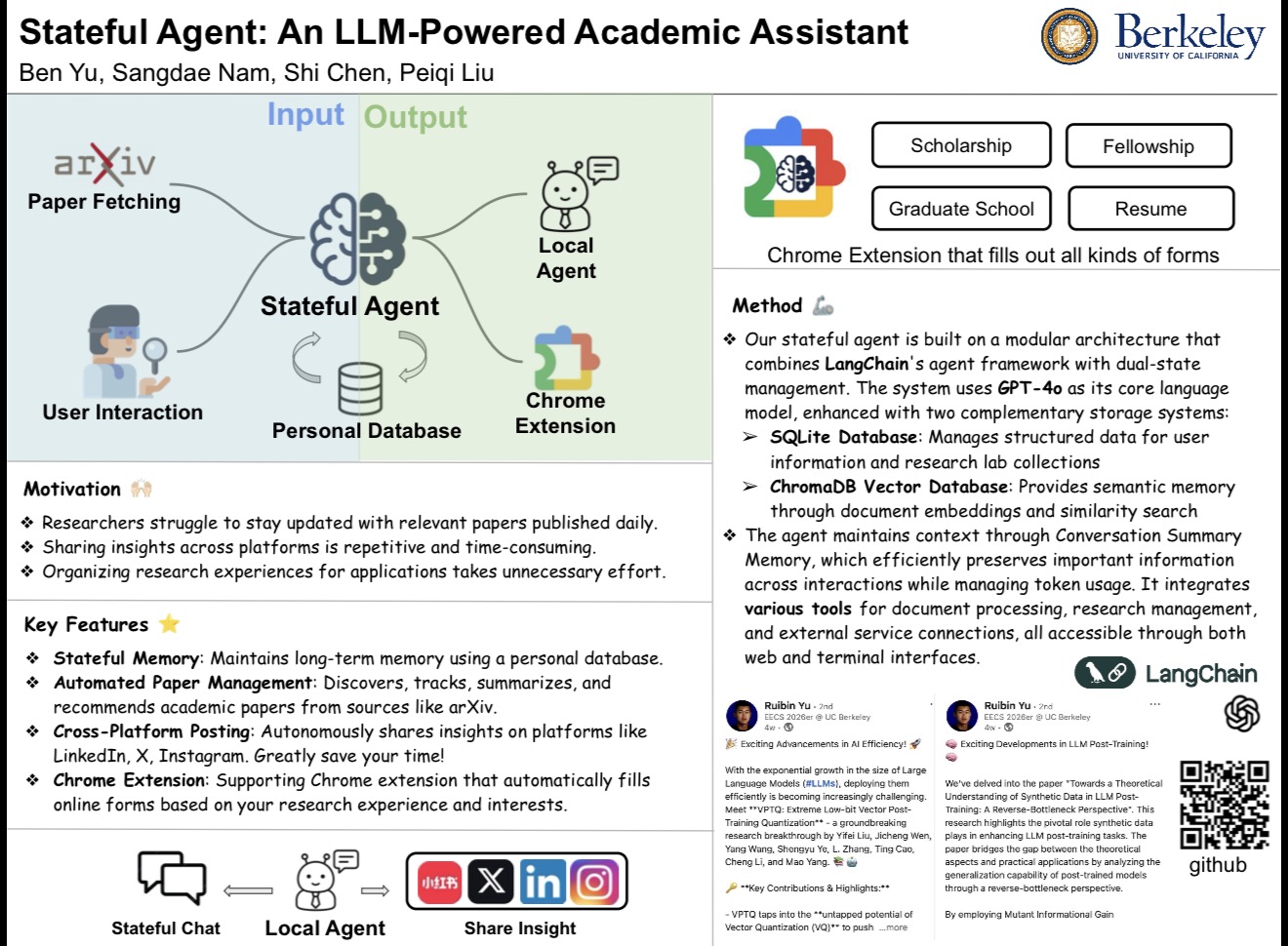

Stateful-Agent: A Cross-Platform LLM-Based Agent with Persistent Memory

Shi Chen and collaborators

Course Instructor: Prof. Dawn Song, UC Berkeley

Course Project, Apr. - June 2025

GitHub / Chrome Extension

Developed a stateful LLM agent with persistent memory for academic research management. Built a Chrome extension to auto-fill graduate school applications. Integrated automated paper discovery using LangChain + GPT-4o. Achieved a 98% success rate in automated posting tasks.

Skills & Technical Expertise

Programming Languages: C, C++, Python, OCaml, Lean (Theorem Proving), Verilog HDL, Pascal, MATLAB

Thanks to Jon Barron for the template.